References

Barnier, B., Siefridt, L., and Marchesiello, P. (1995). Thermal forcing for a global ocean circulation model using a three-year climatology of ECMWF analyses. Journal of Marine Systems, 6(4), 363-380, https://doi.org/10.1016/0924-7963(94)00034-9

Barnier, B. (1998). Forcing the ocean. In “Ocean Modeling and Parameterization”, Editors: E. P. Chassignet and J. Verron, Kluwer Academic, 45-80.

Bell, M.J., Lefèbvre, M., Le Traon, P.-Y., Smith, N., Wilmer-Becker, K. (2009). GODAE: The Global Ocean Data Assimilation Experiment. Oceanography, 22, 14-21, https://doi.org/10.5670/oceanog.2009.62

Bellingham, J. (2009). Platforms: Autonomous Underwater Vehicles. In “Encyclopedia of Ocean Sciences”, Editors-in-Chief: J. K. Cochran, H. Bokuniewicz, P. Yager, ISBN: 9780128130827, https://doi.org/10.1016/B978-012374473-9.00730-X

Bourlès, B., Lumpkin, R., McPhaden, M.J., Hernandez, F., Nobre, P., Campos, E., Yu, L., Planton, S., Busalacchi, A., Moura, A.D., Servain, J., and Trotte, Y. (2008). The PIRATA program: History, accomplishments, and future directions. Bulletin of the American Meteorological Society, 89, 1111-1125, https://doi.org/10.1175/2008BAMS2462.1

Boyer, T.P., Baranova, O.K., Coleman, C., Garcia, H.E., Grodsky, A., Locarnini, R.A., Mishonov, A.V., Paver, C.R., Reagan, J.R., Seidov, D., Smolyar, I.V., Weathers, K.W., Zweng, M.M. (2019). World Ocean Database 2018. A. V. Mishonov, Technical Editor, NOAA Atlas NESDIS 87.

Bouttier, F., and Courtier, P. (2002). Data assimilation concepts and methods. Meteorological training course lecture series, ECMWF, 59. Available at https://www.ecmwf.int/en/elibrary/16928-data-assimilation-concepts-and-methods

Capet, A., Fernández, V., She, J., Dabrowski, T., Umgiesser, G., Staneva, J., Mészáros, L., Campuzano, F., Ursella, L., Nolan, G., El Serafy, G. (2020). Operational Modeling Capacity in European Seas - An EuroGOOS Perspective and Recommendations for Improvement. Frontiers in Marine Science, 7:129, https://doi.org/10.3389/fmars.2020.00129

Carrassi, A., Bocquet, M., Bertino, L., Evensen, G. (2018). Data assimilation in the geosciences: An overview of methods, issues, and perspectives. Wiley Interdisciplinary Reviews: Climate Change, 9(5), e535, https://doi.org/10.1002/wcc.535

Chang, Y.-S., Rosati, A. J., Zhang, S., and Harrison, M. J. (2009). Objective Analysis of Monthly Temperature and Salinity for the World Ocean in the 21st century: Comparison with World Ocean Atlas and Application to Assimilation Validation. Journal of Geophysical Research: Oceans, 114(C2), https://doi.org/10.1029/2008JC004970

Crocker, R., Maksymczuk, J., Mittermaier, M., Tonani, M., and Pequignet, C. (2020). An approach to the verification of high-resolution ocean models using spatial methods. Ocean Science, 16, 831-845, https://doi.org/10.5194/os-16-831-2020

Crosnier, L., and Le Provost, C. (2007). Inter-comparing five forecast operational systems in the North Atlantic and Mediterranean basins: The MERSEA-strand1 Methodology. Journal of Marine Systems, 65(1-4), 354-375, https://doi.org/10.1016/j.jmarsys.2005.01.003

Cummings, J., Bertino, L., Brasseur, P., Fukumori, I., Kamachi, M., Martin, M.J., Mogensen, K., Oke, P., Testut, C.-E., Verron, J., Weaver, A. (2009). Ocean data assimilation systems for GODAE. Oceanography, 22, 96-109, https://doi.org/10.5670/oceanog.2009.69

Dai, A., and Trenberth, K. E. (2002). Estimates of freshwater discharge from continents: Latitudinal and seasonal variations. Journal of Hydrometeorology, 3(6), 660-687, https://doi.org/10.1175/1525-7541(2002)003<0660:EOFDFC>2.0.CO;2

Dai, A., Qian, T., Trenberth, K. E., Milliman, J. D. (2009). Changes in continental freshwater discharge from 1948-2004. Journal of Climate, 22(10), 2773-2791, https://doi.org/10.1175/2008JCLI2592.1

Dai, A. (2021). Hydroclimatic trends during 1950–2018 over global land. Climate Dynamics, 4027-4049, https://doi.org/10.1007/s00382-021-05684-1

De Mey, P. (1997). Data assimilation at the oceanic mesoscale: A review. Journal of the Meteorological Society of Japan, 75(1b), 415-427, https://doi.org/10.2151/jmsj1965.75.1B_415

Donlon, C., Minnett, P., Gentemann, C., Nightingale, T.J., Barton, I., Ward, B., Murray, M. (2002). Toward improved validation of satellite sea surface skin temperature measurements for climate research. Journal of Climate, 15, 353-369, https://doi.org/10.1175/1520-0442(2002)015<0353:TIVOSS>2.0.CO;2

Donlon, C., Casey, K., Robinson, I., Gentemann, C., Reynolds, R., Barton, I., Arino, O., Stark, J., Rayner, N., Le Borgne, P., Poulter, D., Vazquez-Cuervo, J., Armstrong, E., Beggs, H., Llewellyn-Jones, D., Minnett, P., Merchant, C., Evans, R. (2009). The GODAE High-Resolution Sea Surface Temperature Pilot Project. Oceanography, 22, 34-45, https://doi.org/10.5670/oceanog.2009.64

Drévillon, M., Greiner, E., Paradis, D., et al. (2013). A strategy for producing refined currents in the Equatorial Atlantic in the context of the search of the AF447 wreckage. Ocean Dynamics, 63, 63-82, https://doi.org/10.1007/s10236-012-0580-2

Eastwood, S., Le Borgne, P., Péré, S., Poulter, D. (2011). Diurnal variability in sea surface temperature in the Arctic. Remote Sensing of Environment, 115(10), 2594-2602, https://doi.org/10.1016/j.rse.2011.05.015

GHRSST Science Team (2012). The Recommended GHRSST Data Specification (GDS) 2.0, document revision 5. GHRSST International Project Office. Available at https://www.ghrsst.org/governance-documents/ghrsst-data-processing-specification-2-0-revision-5/

Griffies, S. M. (2006). Some ocean model fundamentals. In “Ocean Weather Forecasting”, Editors: E. P. Chassignet and J. Verron, 19-73, Springer-Verlag, Dordrecht, The Netherlands, https://doi.org/10.1007/1-4020-4028-8_2

Groom, S., Sathyendranath, S., Ban, Y., Bernard, S., Brewin, R., Brotas, V., Brockmann, C., Chauhan, P., Choi, J-K., Chuprin, A., Ciavatta, S., Cipollini, P., Donlon, C., Franz, B., He, X., Hirata, T., Jackson, T., Kampel, M., Krasemann, H., Lavender, S., Pardo-Martinez, S., Mélin, F., Platt, T., Santoleri, R., Skakala, J., Schaeffer, B., Smith, M., Steinmetz, F., Valente, A., Wang, M. (2019). Satellite Ocean Colour: Current Status and Future Perspective. Frontiers in Marine Science, 6:485, https://doi.org/10.3389/fmars.2019.00485

Hamlington, B.D., Thompson, P., Hammond, W.C., Blewitt, G., Ray, R.D. (2016). Assessing the impact of vertical land motion on twentieth century global mean sea level estimates. Journal of Geophysical Research, 121(7), 4980-4993, https://doi.org/10.1002/2016JC011747

Hernandez, F., Bertino, L., Brassington, G.B., Chassignet, E., Cummings, J., Davidson, F., Drevillon, M., Garric, G., Kamachi, M., Lellouche, J.-M., et al. (2009). Validation and intercomparison studies within GODAE. Oceanography, 22(3), 128-143, https://doi.org/10.5670/oceanog.2009.71

Hernandez, F., Blockley, E., Brassington, G. B., Davidson, F., Divakaran, P., Drévillon, M., Ishizaki, S., Garcia-Sotillo, M., Hogan, P. J., Lagemaa, P., Levier, B., Martin, M., Mehra, A., Mooers, C., Ferry, N., Ryan, A., Regnier, C., Sellar, A., Smith, G. C., Sofianos, S., Spindler, T., Volpe, G., Wilkin, J., Zaron, E. D., Zhang, A. (2015). Recent progress in performance evaluations and near real-time assessment of operational ocean products. Journal of Operational Oceanography, 8(sup2), https://doi.org/10.1080/1755876X.2015.1050282

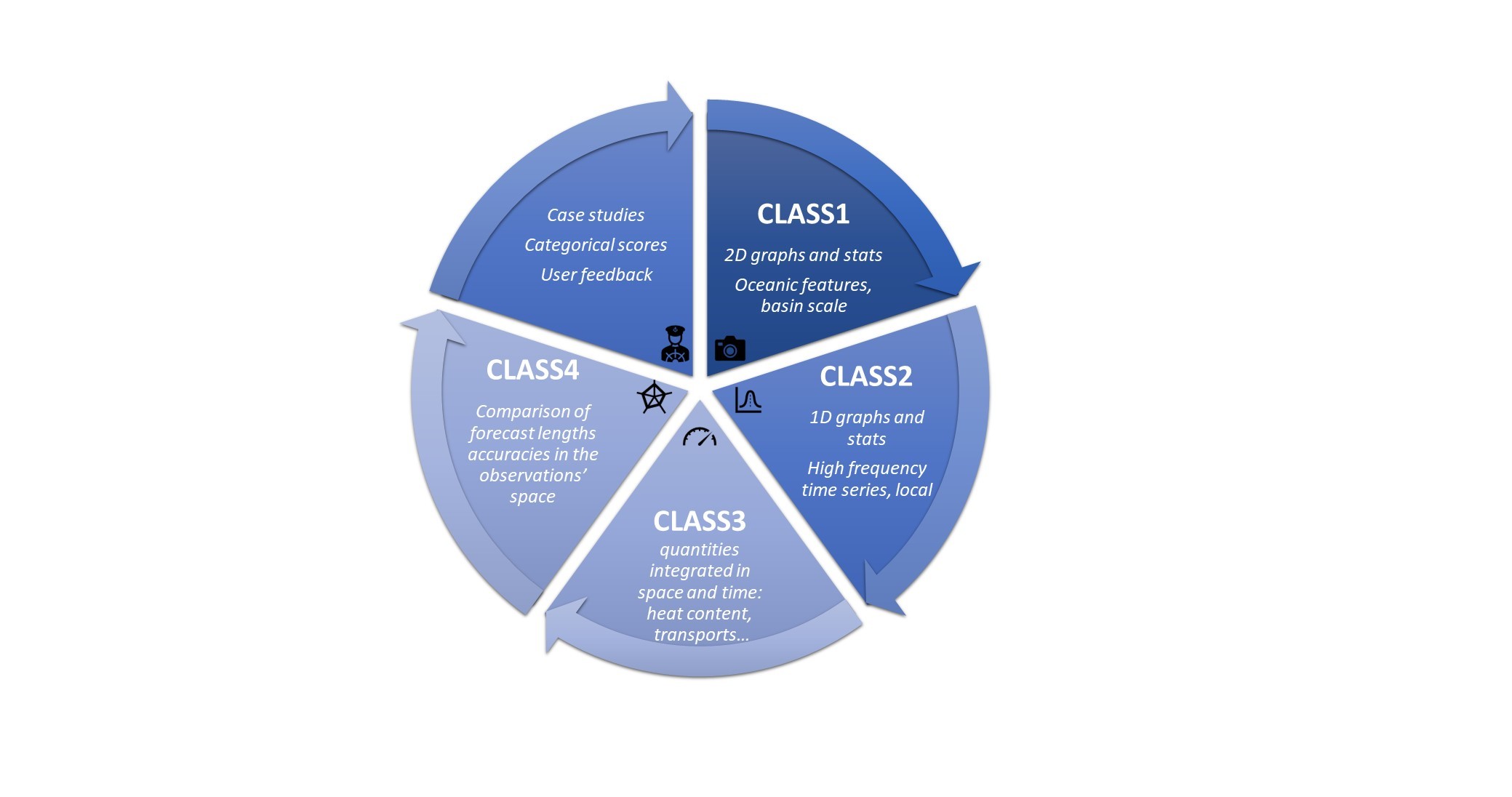

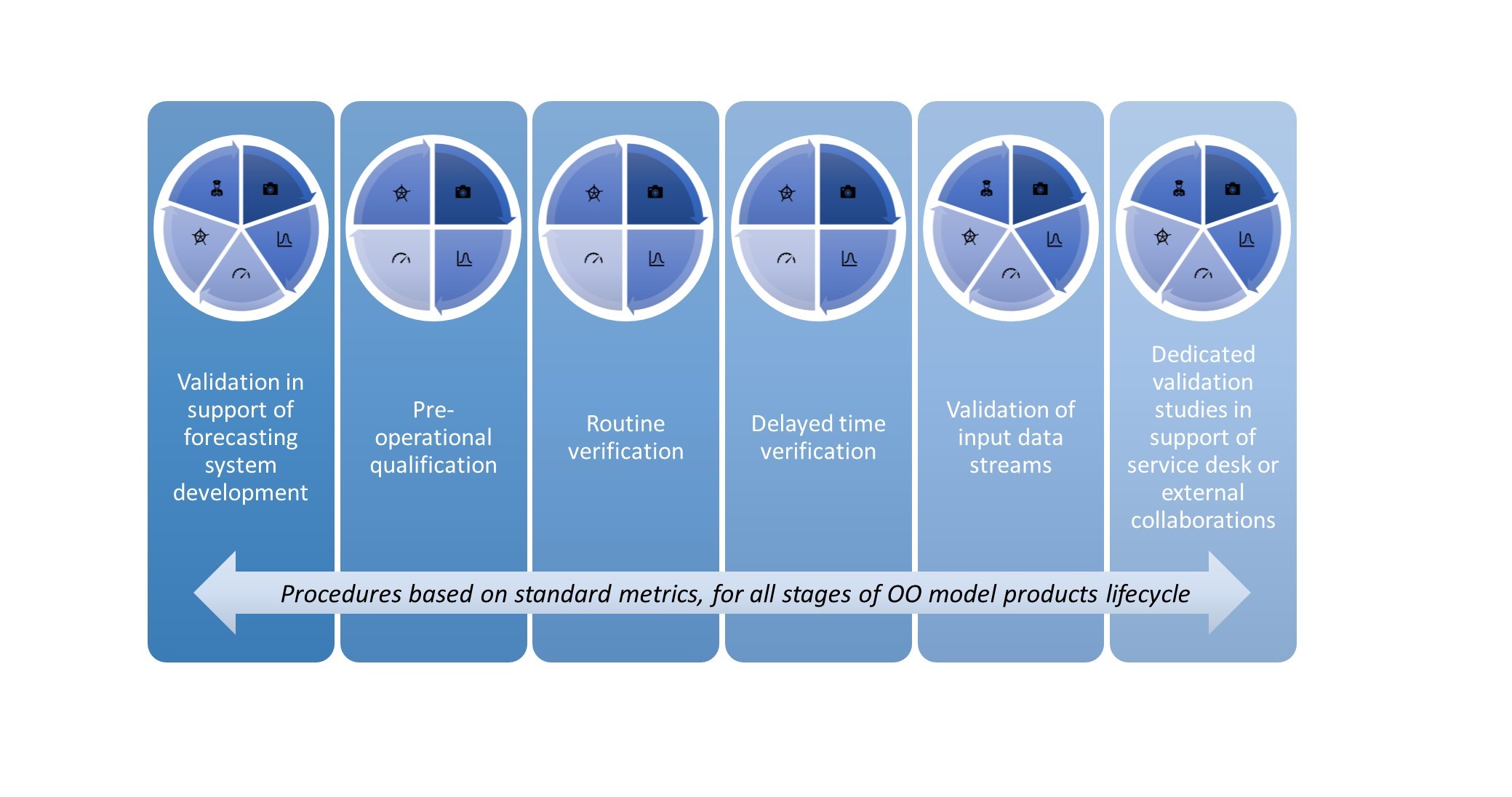

Hernandez, F., Smith, G., Baetens, K., Cossarini, G., Garcia-Hermosa, I., Drevillon, M., ... and von Schuckmann, K. (2018). Measuring performances, skill and accuracy in operational oceanography: New challenges and approaches. In “New Frontiers in Operational Oceanography”, Editors: E. Chassignet, A. Pascual, J. Tintoré, and J. Verron, GODAE OceanView, 759-796, https://doi.org/10.17125/gov2018.ch29

Hollingsworth, A., Shaw, D. B., Lönnberg, P., Illari, L., Arpe, K., and Simmons, A. J. (1986). Monitoring of Observation and Analysis Quality by a Data Assimilation System. Monthly Weather Review, 114(5), 861-879, https://doi.org/10.1175/1520-0493(1986)114<0861:MOOAAQ>2.0.CO;2

International Altimetry Team (2021). Altimetry for the future: building on 25 years of progress. Advances in Space Research, 68(2), 319-363, https://doi.org/10.1016/j.asr.2021.01.022

Kurihara, Y., Murakami, H., and Kachi, M. (2016). Sea surface temperature from the new Japanese geostationary meteorological Himawari-8 satellite. Geophysical Research Letters, 43(3), 1234-1240, https://doi.org/10.1002/2015GL067159

Josey, S.A., Kent, E.C., Taylor, P.K. (1999). New insights into the ocean heat budget closure problem from analysis of the SOC air-sea flux climatology. Journal of Climate, 12(9), 2856-2880, https://doi.org/10.1175/1520-0442(1999)012<2856:NIITOH>2.0.CO;2

Jolliffe, I.T., and Stephenson, D.B. (2003). Forecast Verification: A Practitioner’s Guide in Atmospheric Sciences, John Wiley & Sons Ltd., Hoboken, 296 pages, ISBN: 978-0-470-66071-3

Legates, D.R., McCabe, G.J. Jr. (1999). Evaluating the use of “Goodness of Fit” measures in hydrologic and hydroclimatic model validation. Water Resources Research, 35, 233-241, https://doi.org/10.1029/1998WR900018

Legates, D., and McCabe, G. (2013). A refined index of model performance: A rejoinder. International Journal of Climatology, 33(4), 1053-1056, https://doi.org/10.1002/joc.3487

Le Traon, P.Y., Larnicol, G., Guinehut, S., Pouliquen, S., Bentamy, A., Roemmich, D., Donlon, C., Roquet, H., Jacobs, G., Griffin, D., Bonjean, F., Hoepffner, N., Breivik, L.A. (2009). Data assembly and processing for operational oceanography: 10 years of achievements. Oceanography, 22(3), 56-69, https://doi.org/10.5670/oceanog.2009.66

Levitus, S. (1982). Climatological Atlas of the World Ocean. NOAA/ERL GFDL Professional Paper 13, Princeton, N.J., 173 pp. (NTIS PB83-184093).

Lindstrom, E., Gunn, J., Fischer, A., McCurdy, A., and Glover, L.K. (2012). A Framework for Ocean Observing. IOC Information Document;1284, Rev. 2, https://doi.org/10.5270/OceanObs09-FOO

Maksymczuk, J., Hernandez, F., Sellar, A., Baetens, K., Drevillon, M., Mahdon, R., Levier, B., Regnier, C., Ryan, A. (2016). Product Quality Achievements Within MyOcean. Mercator Ocean Journal #54. Available at https://www.mercator-ocean.eu/en/ocean-science/scientific-publications/mercator-ocean-journal/newsletter-54-focusing-on-the-main-outcomes-of-the-myocean2-and-follon-on-projects/

Mantovani, C., Corgnati, L., Horstmann, J., Rubio, A., Reyes, E., Quentin, C., Cosoli, S., Asensio, J. L., Mader, J., Griffa, A. (2020). Best Practices on High Frequency Radar Deployment and Operation for Ocean Current Measurement. Frontiers in Marine Science, 7:210, https://doi.org/10.3389/fmars.2020.00210

Marks, K., and Smith, W. (2006). An Evaluation of Publicly Available Global Bathymetry Grids. Marine Geophysical Researches, 27, 19-34, https://doi.org/10.1007/s11001-005-2095-4

Martin, M.J. (2016). Suitability of satellite sea surface salinity data for use in assessing and correcting ocean forecasts. Remote Sensing of Environment, 180, 305-319, https://doi.org/10.1016/j.rse.2016.02.004

Martin, M.J., King, R.R., While, J., Aguiar, A.B. (2019). Assimilating satellite sea-surface salinity data from SMOS, Aquarius and SMAP into a global ocean forecasting system. Quarterly Journal of the Royal Meteorological Society, 145(719), 705-726, https://doi.org/10.1002/qj.3461

McPhaden, M. J., Busalacchi, A.J., Cheney, R., Donguy, J.R., Gage, K.S., Halpern, D., Ji, M., Julian, P., Meyers, G., Mitchum, G.T., Niiler, P.P., Picaut, J., Reynolds, R.W., Smith, N., Takeuchi, K. (1998). The Tropical Ocean-Global Atmosphere (TOGA) observing system: A decade of progress. Journal of Geophysical Research, 103, 14169-14240, https://doi.org/10.1029/97JC02906

McPhaden, M. J., Meyers, G., Ando, K., Masumoto, Y., Murty, V. S., Ravichandran, M., Syamsudin, F., Vialard, J., Yu, L., and Yu, W. (2009). RAMA: The Research Moored Array for African-Asian-Australian Monsoon Analysis and Prediction. Bulletin of the American Meteorological Society, 90, 459-480, https://doi.org/10.1175/2008BAMS2608.1

Moore, A.M., Martin, M.J., Akella, S., Arango, H.G., Balmaseda, M., Bertino, L., Ciavatta, S., Cornuelle, B., Cummings, J., Frolov, S., Lermusiaux, P., Oddo, P., Oke, P.R., Storto, A., Teruzzi, A., Vidard, A., Weaver, A.T. (2019). Synthesis of Ocean Observations Using Data Assimilation for Operational, Real-Time and Reanalysis Systems: A More Complete Picture of the State of the Ocean. Frontiers in Marine Science, 6:90, https://doi.org/10.3389/fmars.2019.00090

Naeije, M., Schrama, E., and Scharroo, R. (2000). The Radar Altimeter Database System project RADS. Published in “IGARSS 2000. IEEE 2000 International Geoscience and Remote Sensing Symposium. Taking the Pulse of the Planet: The Role of Remote Sensing in Managing the Environment”, https://doi.org/10.1109/IGARSS.2000.861605

Nurmi, P. (2003). Recommendations on the verification of local weather forecasts (at ECMWF member states). Consultancy report to ECMWF Operations Department. Available at https://www.cawcr.gov.au/projects/verification/Rec_FIN_Oct.pdf

O’Carroll, A.G., Armstrong, E.M., Beggs, H., Bouali, M., Casey, K.S., Corlett, G.K., Dash, P., Donlon, C.J., Gentemann, C.L., Hoyer, J.L., Ignatov, A., Kabobah, K., Kachi, M., Kurihara, Y., Karagali, I., Maturi, E., Merchant, C.J., Minnett, P., Pennybacker, M., Ramakrishnan, B., Ramsankaran, R., Santoleri, R., Sunder, S., Saux Picart, S., Vazquez-Cuervo, J., Wimmer, W. (2019). Observational needs of sea surface temperature. Frontiers in Marine Science, 6:420, https://doi.org/10.3389/fmars.2019.00420

Peng, G., Downs, R.R., Lacagnina, C., Ramapriyan, H., Ivánová, I., Moroni, D., Wei, Y., Larnicol, G., Wyborn, L., Goldberg, M., Schulz, J., Bastrakova, I., Ganske, A., Bastin, L., Khalsa, S.J.S., Wu, M., Shie, C.-L., Ritchey, N., Jones, D., Habermann, T., Lief, C., Maggio, I., Albani, M., Stall, S., Zhou, L., Drévillon, M., Champion, S., Hou, C.S., Doblas-Reyes, F., Lehnert, K., Robinson, E., and Bugbee, K. (2021). Call to Action for Global Access to and Harmonization of Quality Information of Individual Earth Science Datasets. Data Science Journal, 20(1), 19, http://doi.org/10.5334/dsj-2021-019

Petrenko, B., Ignatov, A., Kihai, Y., Dash, P. (2016). Sensor-Specific Error Statistics for SST in the Advanced Clear-Sky Processor for Oceans. Journal of Atmospheric and Oceanic Technology, 33(2), 345-359, https://doi.org/10.1175/JTECH-D-15-0166.1

Roarty, H., Cook, T., Hazard, L., George, D., Harlan, J., Cosoli, S., Wyatt, L., Alvarez Fanjul, E., Terrill, E., Otero, M., Largier, J., Glenn, S., Ebuchi, N., Whitehouse, Br., Bartlett, K., Mader, J., Rubio, A., Corgnati, L., Mantovani, C., Griffa, A., Reyes, E., Lorente, P., Flores-Vidal, X., Saavedra-Matta, K. J., Rogowski, P., Prukpitikul, S., Lee, S-H., Lai, J-W., Guerin, C-A., Sanchez, J., Hansen, B., Grilli, S. (2019). The Global High Frequency Radar Network. Frontiers in Marine Science, 6:164, https://doi.org/10.3389/fmars.2019.00164

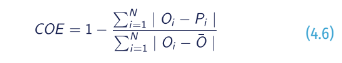

Ryan, A. G., Regnier, C., Divakaran, P., Spindler, T., Mehra, A., Smith, G. C., et al. (2015). GODAE OceanView Class 4 forecast verification framework: global ocean inter-comparison. Journal of Operational Oceanography, 8(sup1), S112-S126, https://doi.org/10.1080/1755876X.2015.1022330

Scharroo, R. (2012). RADS version 3.1: User Manual and Format Specification. Available at http://rads.tudelft.nl/rads/radsmanual.pdf

Sotillo, M. G., Garcia-Hermosa, I., Drévillon, M., Régnier, C., Szczypta, C., Hernandez, F., Melet, A., Le Traon, P.Y. (2021). Communicating CMEMS Product Quality: evolution & achievements along Copernicus-1 (2015-2021). Mercator Ocean Journal #57. Available at https://marine.copernicus.eu/news/copernicus-1-marine-service-achievements-2015-2021

Tonani, M., Balmaseda, M., Bertino, L., Blockley, E., Brassington, G., Davidson, F., Drillet, Y., Hogan, P., Kuragano, T., Lee, T., Mehra, A., Paranathara, F., Tanajura, C., Wang, H. (2015). Status and future of global and regional ocean prediction systems. Journal of Operational Oceanography, 8, s201-s220, https://doi.org/10.1080/1755876X.2015.1049892

Tournadre, J., Bouhier, N., Girard-Ardhuin, F., Remy, F. (2015). Large icebergs characteristics from altimeter waveforms analysis. Journal of Geophysical Research: Oceans, 120(3), 1954-1974, https://doi.org/10.1002/2014JC010502

Tozer, B., Sandwell, D.T., Smith, W.H.F., Olson, C., Beale, J. R., and Wessel, P. (2019). Global bathymetry and topography at 15 arc sec: SRTM15+. Earth and Space Science, 6, 1847-1864, https://doi.org/10.1029/2019EA000658

Vinogradova, N.T., Ponte, R.M., Fukumori, I., and Wang, O. (2014). Estimating satellite salinity errors for assimilation of Aquarius and SMOS data into climate models. Journal of Geophysical Research: Oceans, 119(8), 4732-4744, https://doi.org/10.1002/2014JC009906

Vinogradova, N., Lee, T., Boutin, J., Drushka, K., Fournier, S., Sabia, R., Stammer, D., Bayler, E., Reul, N., Gordon, A., Melnichenko, O., Li, L., Hackert, E., Martin, M., Kolodziejczyk, N., Hasson, A., Brown, S., Misra, S., and Linkstrom, E. (2019). Satellite Salinity Observing System: Recent Discoveries and the Way Forward. Frontiers in Marine Science, 6:243, https://doi.org/10.3389/fmars.2019.00243

Zhang, H., Beggs, H., Wang, X.H., Kiss, A. E., Griffin, C. (2016). Seasonal patterns of SST diurnal variation over the Tropical Warm Pool region. Journal of Geophysical Research: Oceans, 121(11), 8077-8094, https://doi.org/10.1002/2016JC012210
